In the world of software development, the old adage "you can't improve what you don't measure" has never been more relevant. Gut feelings and anecdotal evidence are no longer enough to steer a modern engineering organization. Today, high-performing teams thrive on data, using real-time insights to optimize their workflows, improve quality, and accelerate delivery.

But what do these teams actually measure?

At keypup.io, we're in a unique position to answer that question. Our platform provides an AI assistant that helps engineering leaders, product managers, and developers get instant answers from their development data. By analyzing thousands of these anonymized real-world queries, we’ve identified the most pressing topics on the minds of software professionals.

Here are the top 5 areas of the Software Development Lifecycle (SDLC) that teams are analyzing most, complete with real examples of prompts our users have entered.

1. Developer Productivity and Contribution

SDLC Context: In a collaborative environment, understanding individual and team output is not about micromanagement; it's about visibility, recognition, and workload balancing. As teams grow and remote work becomes standard, leaders need objective ways to see who is contributing, who might be blocked, and where mentoring opportunities lie. The trend is moving away from simplistic metrics like lines of code towards more holistic measures like pull request (PR) throughput and commit activity.

Prompts Topic: This was the most frequent topic, focusing on quantifying the output of individual developers. Users want to see who is contributing the most work, measured by metrics like the number of merged pull requests, lines of code (LOC), and commit frequency. These requests often include filtering out specific team members or bots to focus on external or specific contributors.

How Keypup Helps: By integrating with Git providers (like GitHub, GitLab) and project management tools (like Jira), Keypup builds a complete picture of every contribution. Our AI assistant makes it easy to generate detailed reports that would otherwise require complex manual data wrangling.

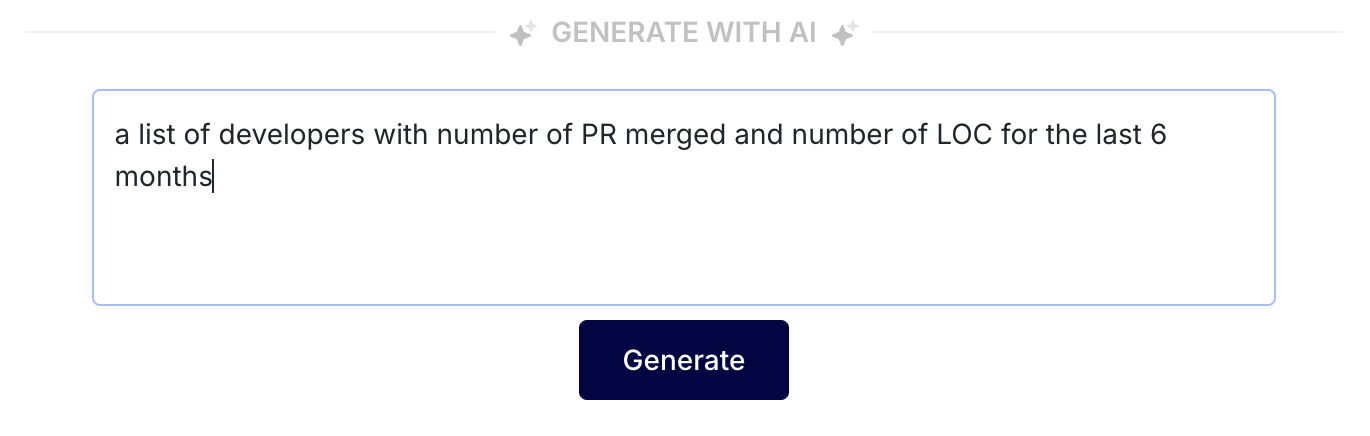

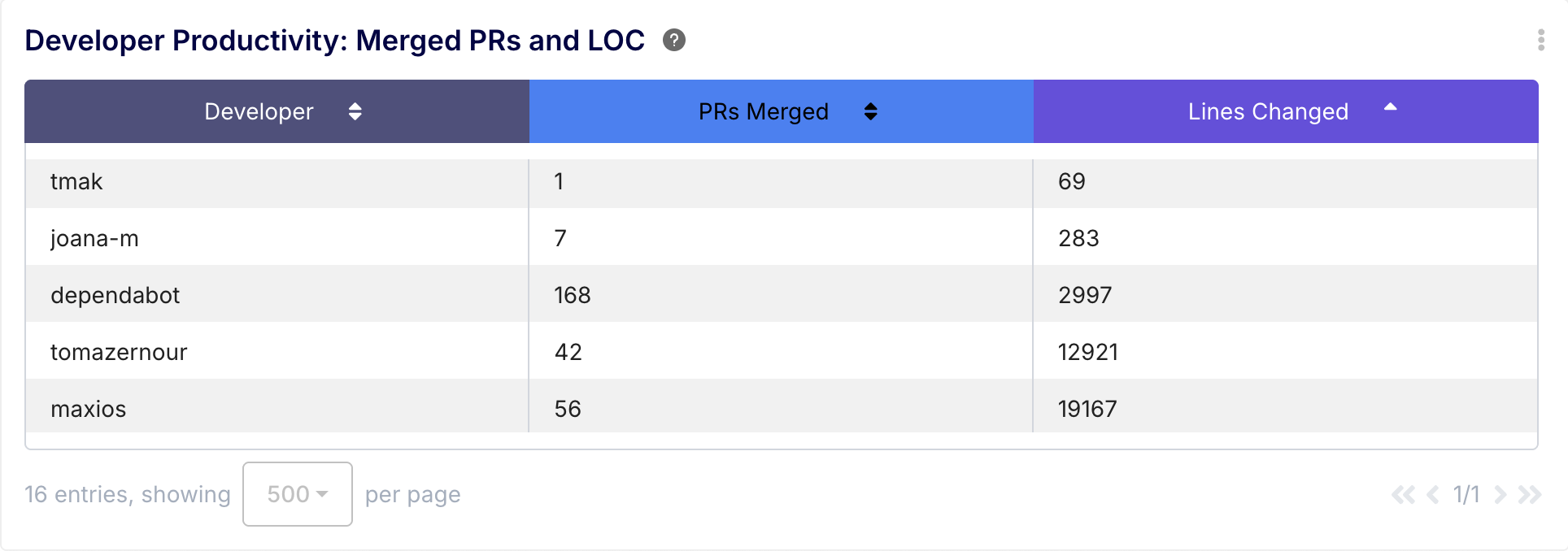

Example 1: Measuring Merged PRs and Lines of Code

User Prompt: "a list of developers with number of PR merged and number of LOC for the last 6 months"

AI assistant answer

Here is a list widget displaying the number of merged pull requests and the total lines of code changed by each developer over the last 6 months.

- The report is based on the Issues & Pull Requests dataset.

- Dimension: The primary grouping is by Developer (`author_username`).

Metrics:

- Merged PRs: This metric counts the number of pull requests that have been merged by each author.

- Lines of Code: This metric sums the total lines changed (`lines_changed`) for all merged pull requests by each author.

Filters:

- The data is filtered to include only pull requests (`type` is `'pull_request'`) that are in the `MERGED` state.

- Only data from the last 6 months (`merged_at` after 6 months ago) is included to focus on recent activity.

You can click on any row in the table to drill down and see the individual merged pull requests that contribute to the selected developer's metrics.
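To make the logic of this insight concrete, here is a minimal Python sketch of the same aggregation outside Keypup. It assumes a hypothetical `Item` record whose fields mirror the names in the answer (`type`, `state`, `author_username`, `lines_changed`, `merged_at`) and approximates "the last 6 months" as 180 days; it illustrates the calculation and is not Keypup's implementation.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Item:
    # Hypothetical record; field names mirror those used in the insight
    type: str                      # 'pull_request' or 'issue'
    state: str                     # e.g. 'MERGED', 'OPEN', 'CLOSED'
    author_username: str
    lines_changed: int
    merged_at: Optional[datetime]


def merged_prs_and_loc(items, now, window_days=180):
    """Count merged PRs and sum lines_changed per author within the window."""
    cutoff = now - timedelta(days=window_days)  # ~6 months (assumption)
    stats = defaultdict(lambda: {"merged_prs": 0, "lines_of_code": 0})
    for it in items:
        # Filters: only pull requests in the MERGED state
        if it.type != "pull_request" or it.state != "MERGED":
            continue
        # Filter: merged_at after the cutoff
        if it.merged_at is None or it.merged_at <= cutoff:
            continue
        row = stats[it.author_username]  # dimension: author_username
        row["merged_prs"] += 1
        row["lines_of_code"] += it.lines_changed
    return dict(stats)
```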

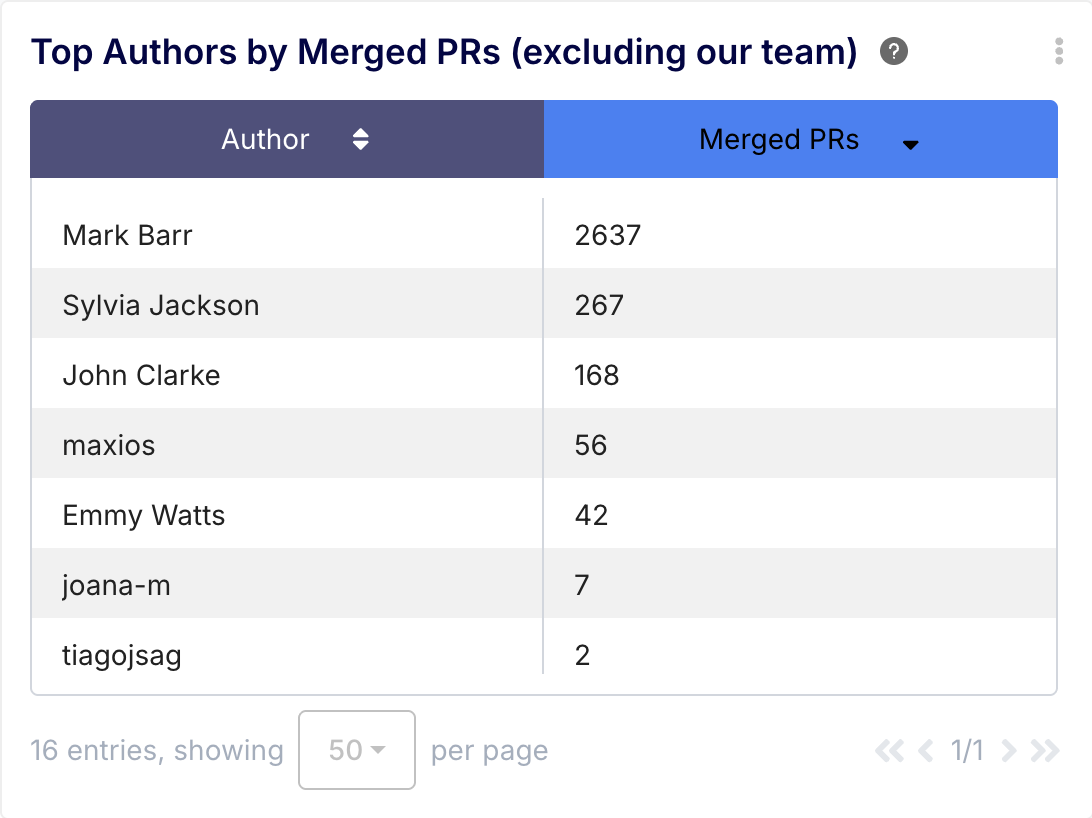

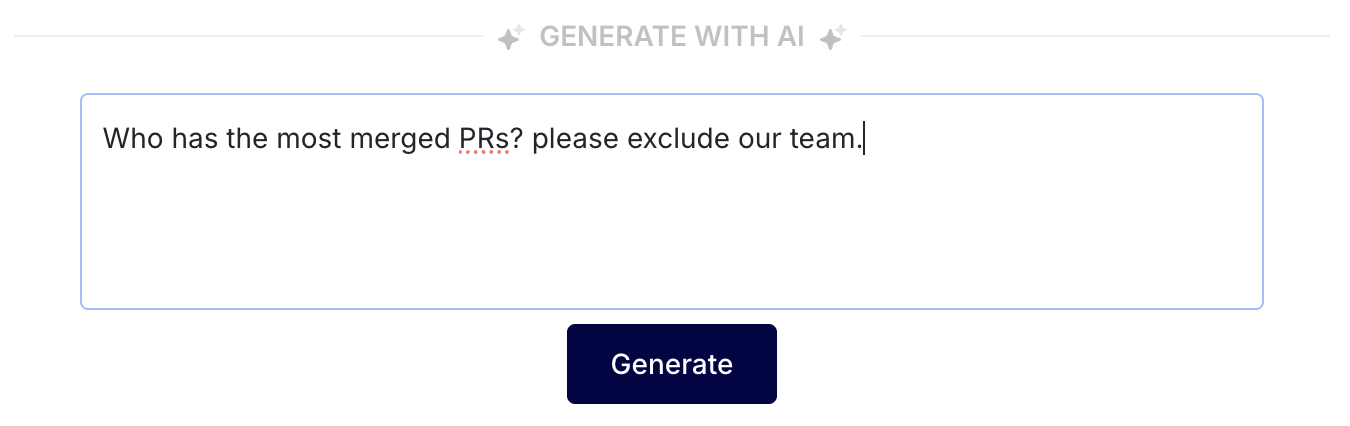

Example 2: Focusing on External Contributors

User Prompt: "Who has the most merged PRs? please exclude our team."

AI assistant answer

Here's an insight that displays a list of authors with the most merged Pull Requests. It excludes specific team members, and you can customize this filter to specify your team's usernames.

- Dataset: This insight uses the Issues & Pull Requests dataset.

Dimensions:

- Author: The `author_username` field is used to group the data by the author of the pull request.

Metrics:

- Merged PRs: A `COUNT` of `_system_id` is used to count the number of pull requests. This metric is sorted in descending order to show who has the most merged PRs.

Filters:

- The `type` is set to `pull_request` to include only pull requests.

- The `state` is set to `MERGED` to count only merged pull requests.

- The `merged_at` field is filtered to include only pull requests merged in the last 12 months.

- The `author_username` field uses a `NOT_IN` operator with placeholder values `"team_member_1"`, `"team_member_2"`. You should update this filter to include the actual usernames of the team members you wish to exclude.
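The combination of a `NOT_IN` filter and a descending count can be sketched in Python as follows. The dict keys mirror the field names above, the 12-month window is approximated as 365 days, and `team_member_1`/`team_member_2` are the same placeholders used in the generated insight; all of this is an assumption for illustration.

```python
from collections import Counter
from datetime import datetime, timedelta

# Placeholders from the generated insight; replace with real team usernames
TEAM = {"team_member_1", "team_member_2"}


def top_merged_pr_authors(prs, now, exclude=TEAM, window_days=365):
    """Return (author, merged_pr_count) pairs, most merged PRs first.

    prs: iterable of dicts with 'type', 'state', 'merged_at', 'author_username'.
    """
    cutoff = now - timedelta(days=window_days)  # ~last 12 months
    counts = Counter(
        pr["author_username"]
        for pr in prs
        if pr["type"] == "pull_request"
        and pr["state"] == "MERGED"
        and pr["merged_at"] is not None
        and pr["merged_at"] > cutoff
        and pr["author_username"] not in exclude  # the NOT_IN filter
    )
    return counts.most_common()  # descending, like the sorted COUNT metric
```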

2. Bug Tracking, Triage, and Quality

SDLC Context: Software quality is a direct reflection of the development process. Teams are no longer just counting the number of bugs; they are digging deeper to understand their impact and origin. The modern need is to differentiate actionable defects from "noise" like user errors or non-reproducible reports. This allows teams to focus engineering resources where they matter most and refine their QA processes.

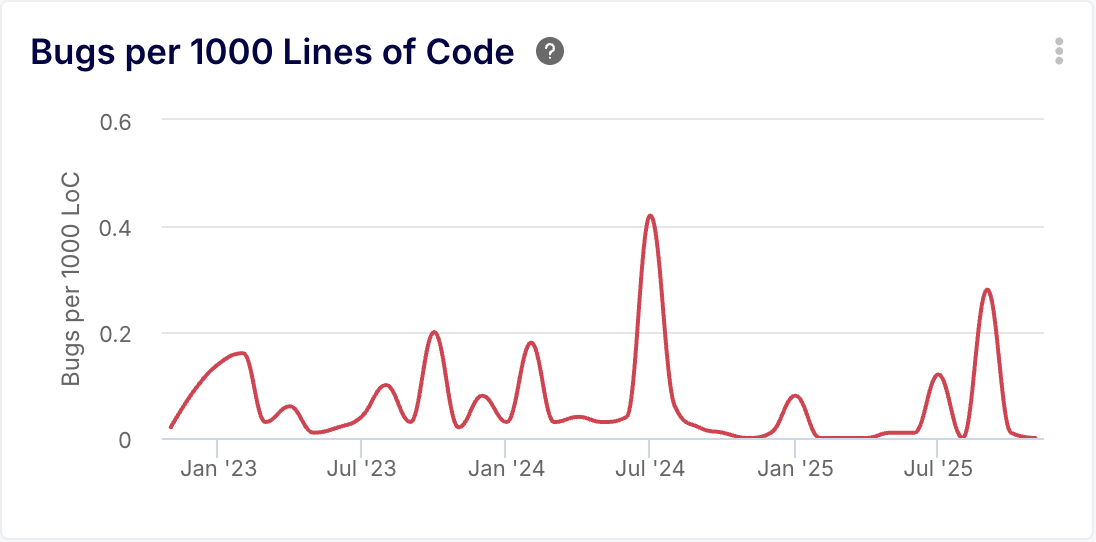

Prompts Topic: This topic centers on understanding the volume, density, and nature of bugs. Users frequently ask to measure bug trends over time, calculate bug density relative to code volume (bugs per thousand lines of code), and, most notably, differentiate between actionable bugs and those closed for reasons like "not reproducible" or "user error."

How Keypup Helps: Keypup's flexible filtering and custom formula engine allow teams to create sophisticated bug management dashboards. The AI can instantly generate insights that categorize bugs based on labels, tags, and other metadata from your tools.

Example 1: Measuring Bug Density

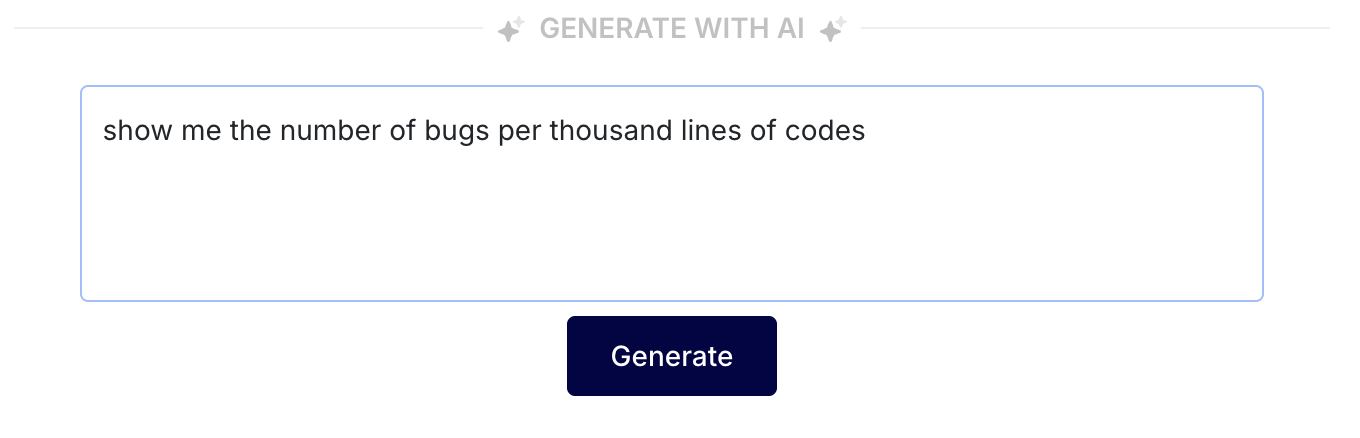

User Prompt: "show me the number of bugs per thousand lines of codes"

AI assistant answer

I can help you with that! I've created a new Line Chart insight displaying the number of bugs per thousand lines of code. This metric is calculated by dividing the total count of items (issues and pull requests) labeled as 'bug' by the total number of lines changed, then multiplying by 1000. I've also set it to display data from the last 30 months to give you a recent overview.
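The density formula reduces to `bug_count / lines_changed * 1000` per period. The sketch below assumes each item is a dict with a hypothetical `month` key, a list of `labels`, and `lines_changed`, and that a bug is any item carrying the exact label `bug`; Keypup's own label matching may differ.

```python
from collections import defaultdict


def bug_density_by_month(items):
    """Bugs per thousand lines changed, per month.

    items: dicts with 'month' (e.g. '2024-05'), 'labels' (list of str)
    and 'lines_changed' (int). All keys are hypothetical.
    """
    bugs = defaultdict(int)
    lines = defaultdict(int)
    for it in items:
        lines[it["month"]] += it["lines_changed"]
        if "bug" in it["labels"]:  # exact label match (assumption)
            bugs[it["month"]] += 1
    # bug_count / lines_changed * 1000, returning 0.0 for months with no changed lines
    return {
        m: (bugs[m] / lines[m] * 1000 if lines[m] else 0.0)
        for m in sorted(lines)
    }
```

For example, 2 bug-labeled items against 4,000 changed lines in one month gives 2 / 4000 * 1000 = 0.5 bugs per thousand lines.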

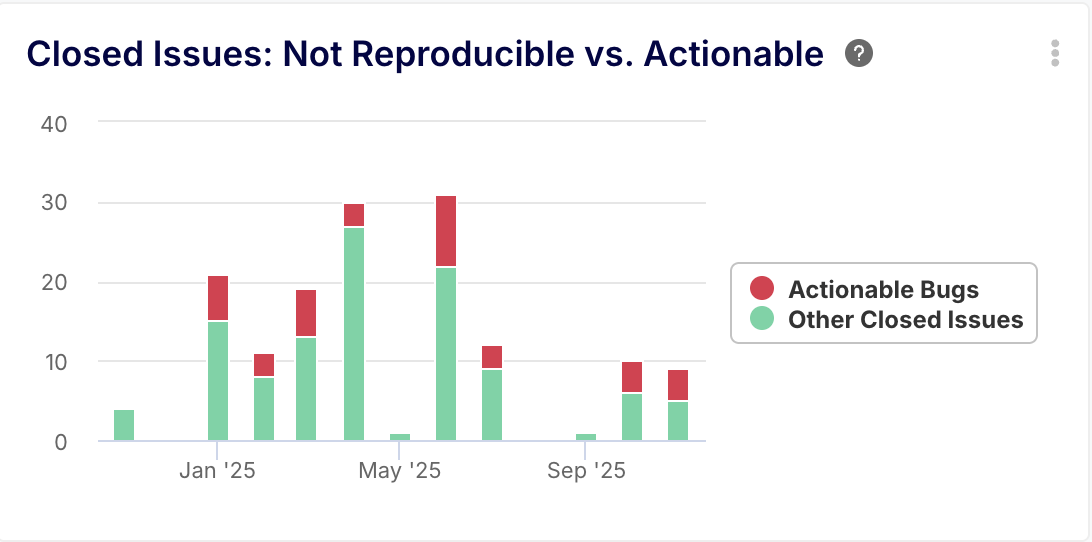

Example 2: Differentiating Actionable Bugs from Noise

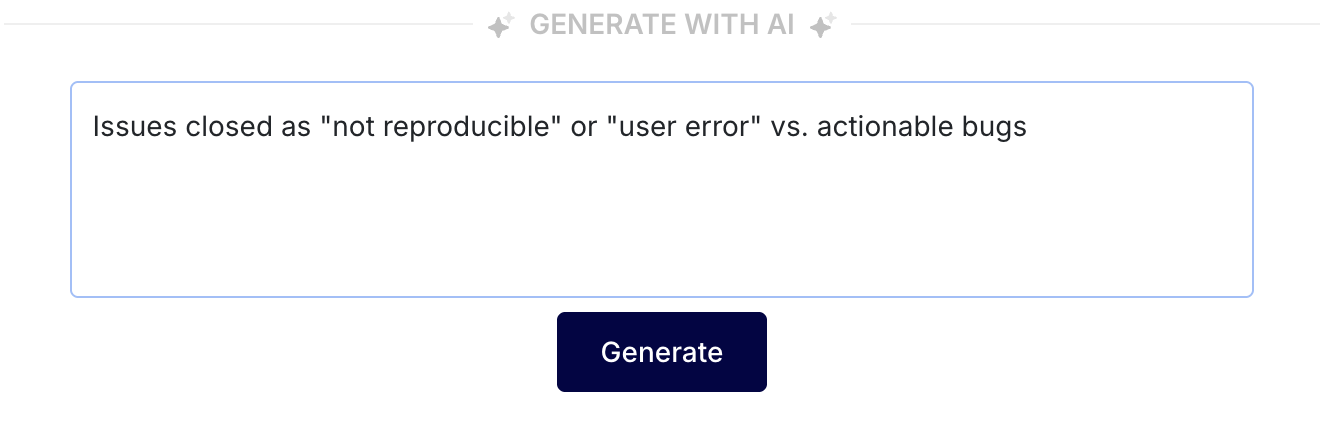

User Prompt: "Issues closed as "not reproducible" or "user error" vs. actionable bugs"

AI assistant answer

To achieve this, we will create a Stacked Column Chart that breaks down closed issues into these two distinct categories over time. The chart will help you visualize the trends in each category.

Here's how the new widget is configured:

- Name: "Closed Issues: Not Reproducible vs. Actionable"

- Dataset: Issues & Pull Requests (`INBOX_ITEMS`), as it contains both issue details and their labels.

- Chart Type: `STACKEDCOLUMNCHART` is chosen to clearly show the count of each category and its proportion within the total closed issues each month.

Dimensions:

- Closed at (X-axis): Grouped by year and month (`YEAR_MONTH(closed_at)`) to show monthly trends.

- Bug Category (stacking dimension): A custom formula categorizes issues. If an issue's labels contain "not reproducible" or "user error," it's placed in one category. If it's tagged as a "bug" and does not have those labels, it's categorized as an "Actionable Bug."

Metrics: A simple `COUNT()` of `_system_id` to count the number of issues in each category.
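The categorization formula can be approximated in Python like this. It assumes labels are matched case-insensitively as whole labels (not substrings) and drops issues that fall into neither category; the category names and the `(closed_at, labels)` input shape are hypothetical.

```python
from collections import Counter
from datetime import datetime

NOISE_LABELS = {"not reproducible", "user error"}


def bug_category(labels):
    """Return a category name, or None if the issue fits neither category."""
    normalized = {label.strip().lower() for label in labels}
    if normalized & NOISE_LABELS:
        return "Not Reproducible / User Error"
    if "bug" in normalized:
        return "Actionable Bug"
    return None


def monthly_category_counts(closed_issues):
    """closed_issues: iterable of (closed_at: datetime, labels: list of str)."""
    counts = Counter()
    for closed_at, labels in closed_issues:
        category = bug_category(labels)
        if category is not None:
            # Equivalent of YEAR_MONTH(closed_at) x category, counted like COUNT()
            counts[(closed_at.strftime("%Y-%m"), category)] += 1
    return counts
```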

3. Issue Lifecycle and Response Time

SDLC Context: The efficiency of a development team is often determined by how smoothly work flows through the SDLC. Long delays in any stage, from initial triage to final deployment, can indicate significant bottlenecks. High-performing teams are obsessed with reducing these delays. They measure metrics like Cycle Time (time spent in active development) and Lead Time (total time from creation to closure) to identify and eliminate friction.

Prompts Topic: These conversations focus on the efficiency of the development process by measuring how long it takes for work items to move through the workflow. Key metrics include cycle time (from active work to completion), lead time (from creation to completion), and time to first comment, which helps measure team responsiveness.

How Keypup Helps: Keypup automatically tracks the entire timeline of issues and PRs, making it simple to calculate complex lifecycle metrics. The AI assistant can generate trend charts that visualize whether your process improvements are actually shortening your development cycles.

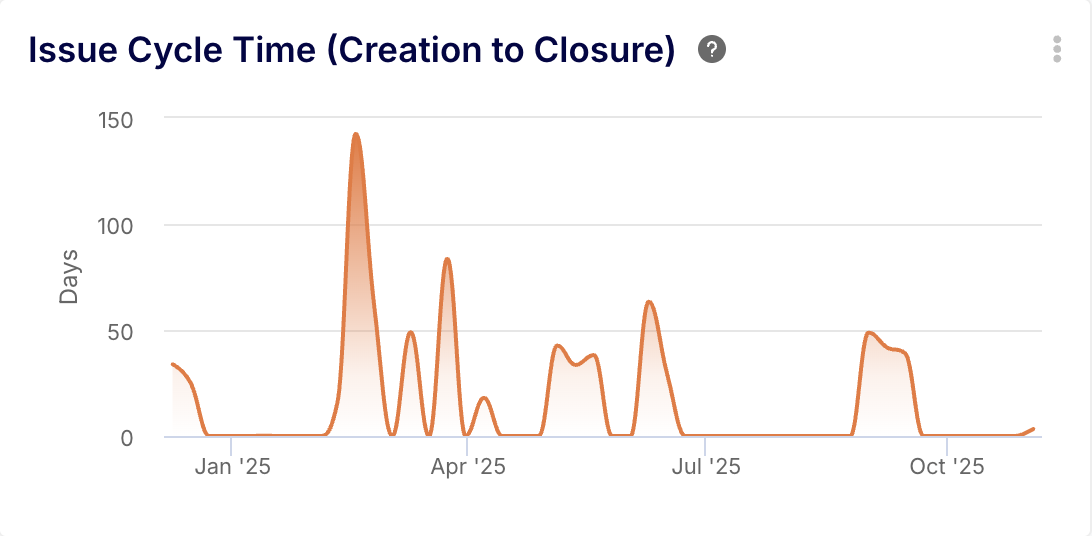

Example 1: Calculating True Lead Time

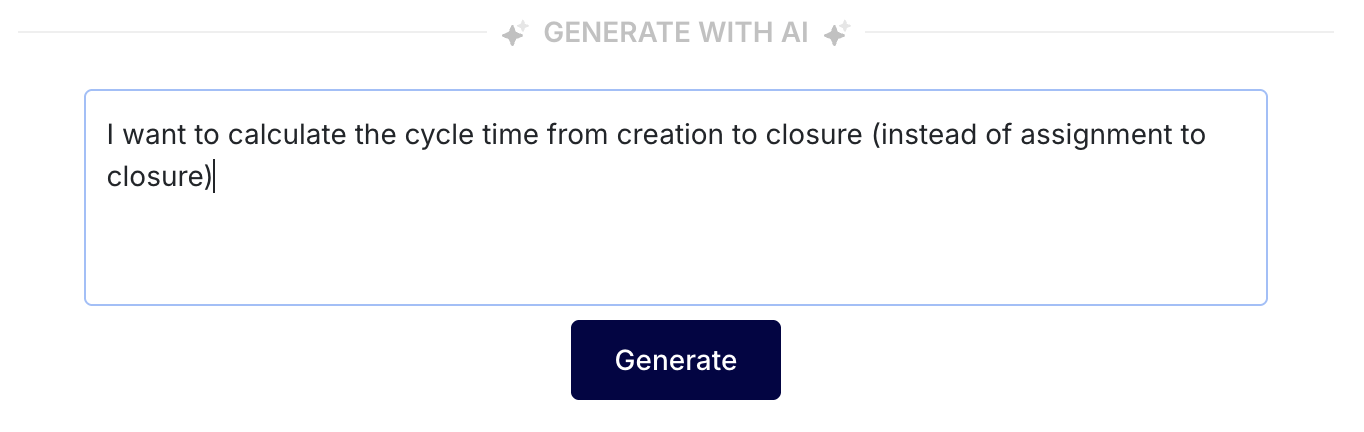

User Prompt: "I want to calculate the cycle time from creation to closure (instead of assignment to closure)"

AI assistant answer

You asked to change the cycle time calculation for the "Issue lead time for changes" insight from assignment to closure to creation to closure. I have updated the metric's formula and the primary dimension accordingly.

Specifically, I made the following changes:

- Updated the `ref` for the dimension in the main series from `assigned_at` to `created_at`.

- Updated the `ref` for the metric formula to calculate the average time from `created_at` to `closed_at` (or `NOW()` if still open).

- Adjusted the main filter to apply the time constraint on `created_at` instead of `assigned_at`.

- In the drilldown configuration, I updated the corresponding date dimension filter and the LTFC (days) formula to use `created_at` as the starting point.
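The updated metric, the average of `closed_at` (or now, if still open) minus `created_at`, can be sketched as below. The `(created_at, closed_at)` tuple shape and the optional `since` cutoff, which stands in for the main filter on `created_at`, are assumptions for illustration.

```python
from datetime import datetime
from statistics import mean


def avg_lead_time_days(issues, now, since=None):
    """Average days from created_at to closed_at (or now if still open).

    issues: iterable of (created_at, closed_at_or_None) datetime pairs.
    since: optional cutoff applied to created_at, like the updated main filter.
    """
    durations = []
    for created_at, closed_at in issues:
        if since is not None and created_at < since:
            continue
        end = closed_at if closed_at is not None else now  # NOW() if still open
        durations.append((end - created_at).total_seconds() / 86400)
    return mean(durations) if durations else 0.0
```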

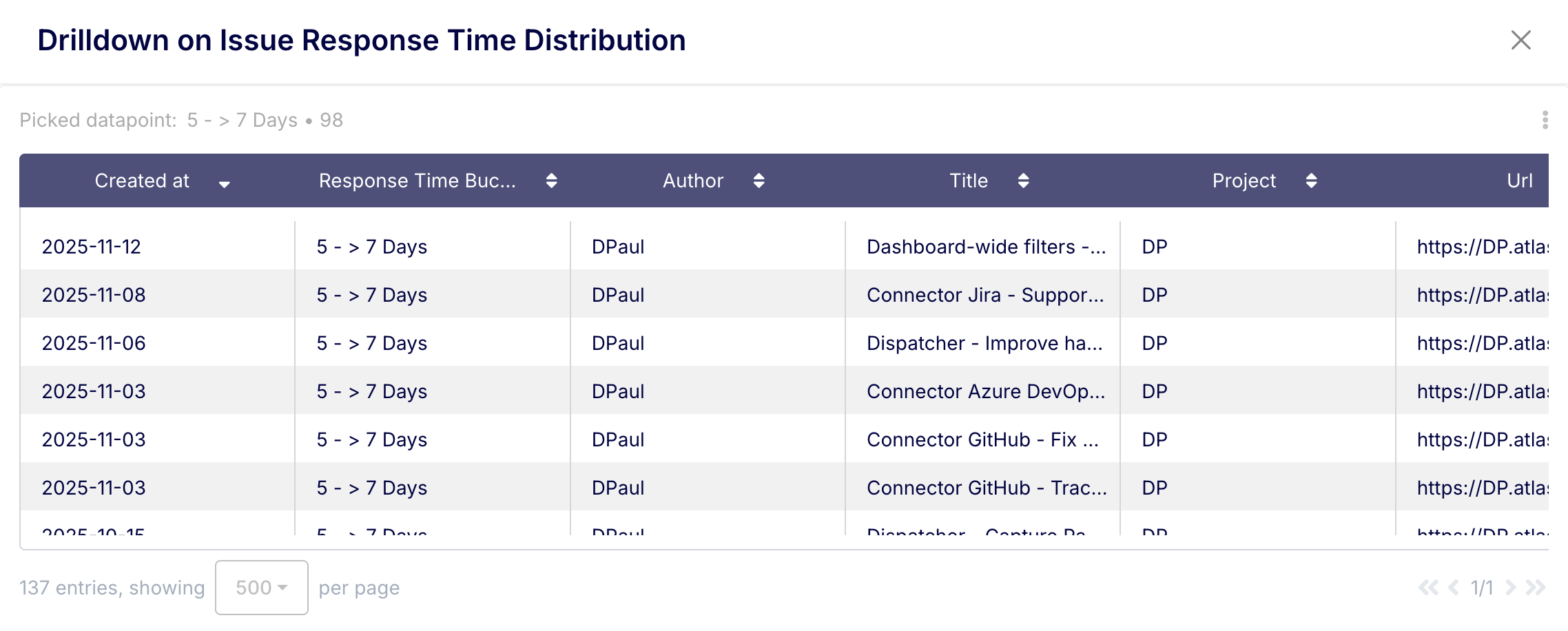

Example 2: Measuring Team Responsiveness

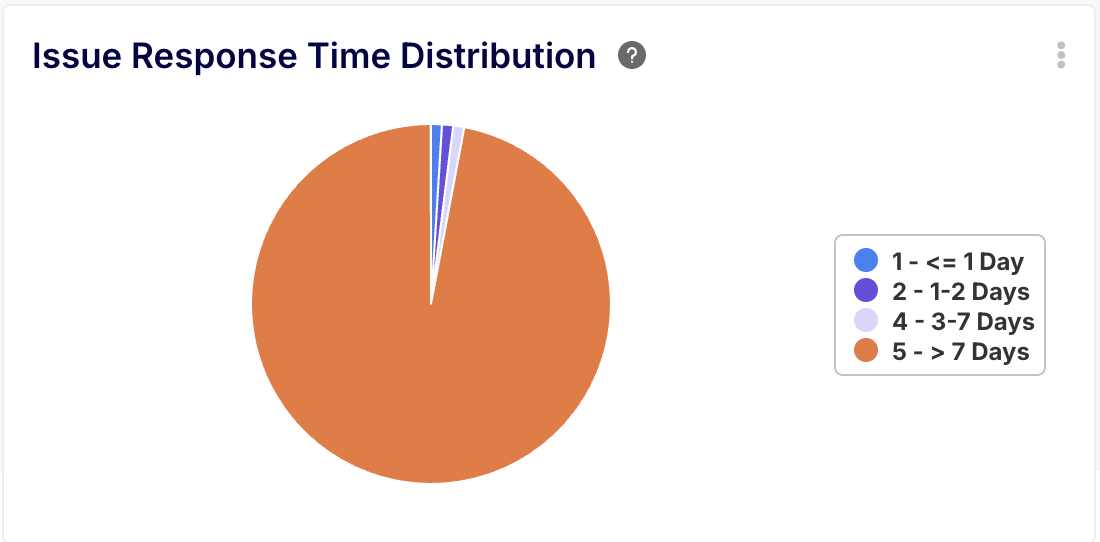

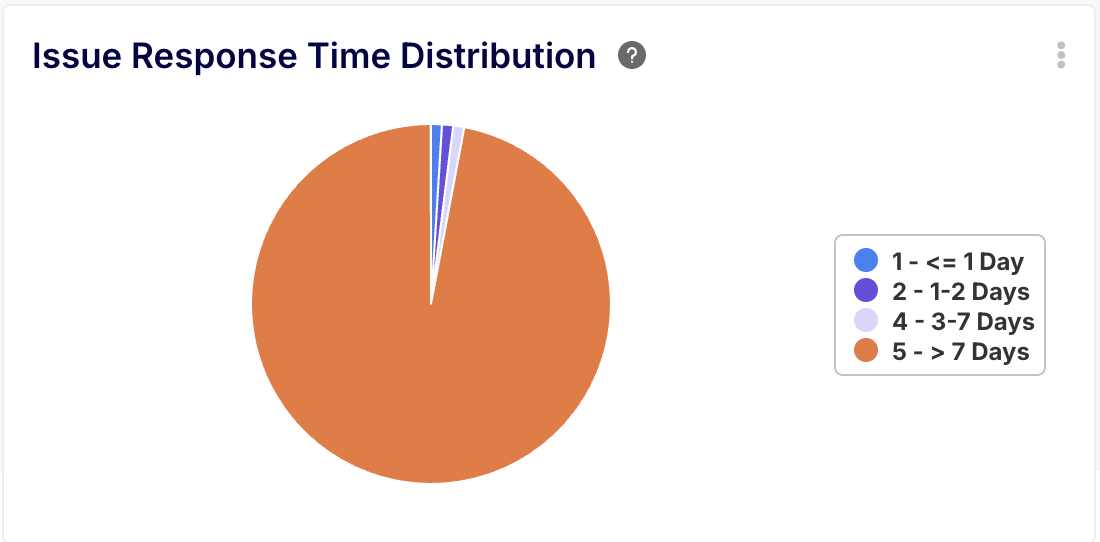

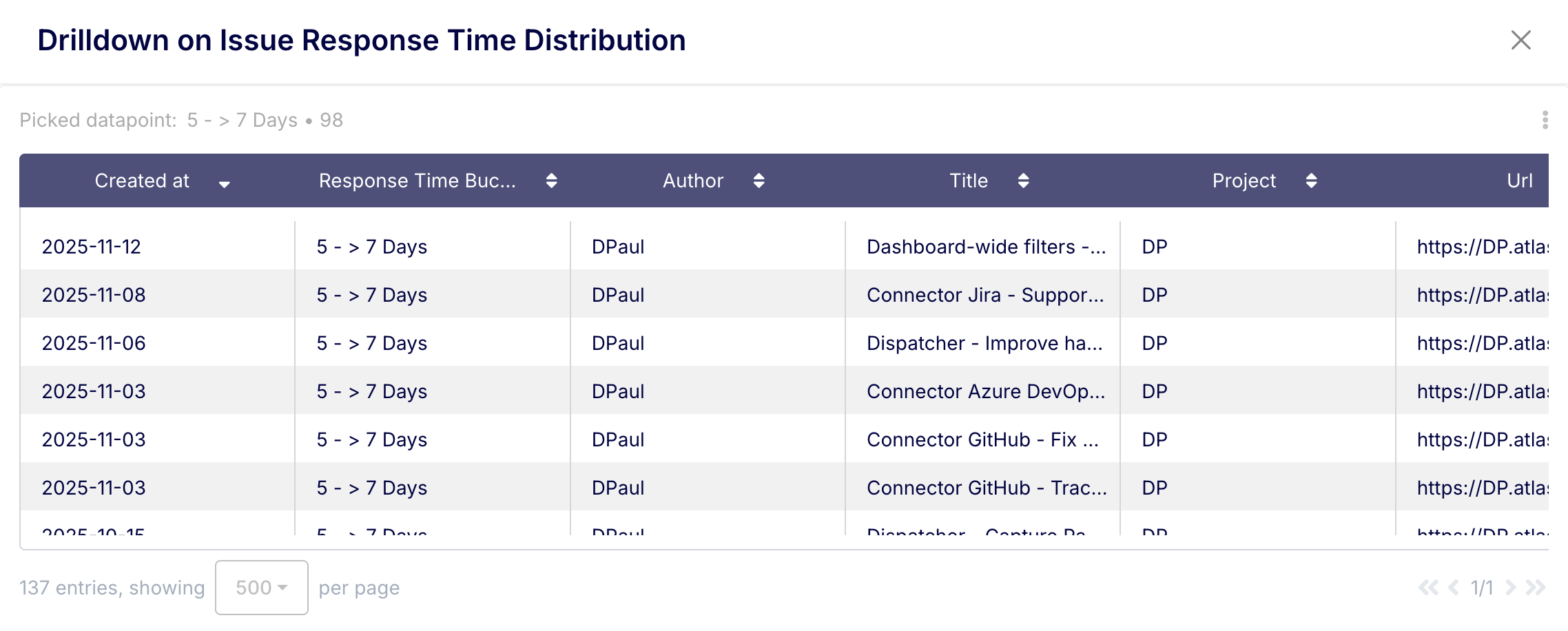

User Prompt: "can i see what percentage of issues are responded to at all by bucket? (example: 50% of issues are responded to within 1 day, 25% within 2 days, 10% within 3 days, etc)."

AI assistant answer

Here is an insight that visualizes the percentage of issues responded to within different time buckets. This Pie Chart is configured to use the 'Issues & Pull Requests' dataset and categorizes issues based on the duration from their creation to the first comment.

The insight includes the following:

- Dataset: Issues & Pull Requests (`INBOX_ITEMS`)

- Metric: A `COUNT()` of issues (`_system_id`) to show the number of issues in each response time bucket.

- Dimension: A custom formula calculates the response time in days (`(first_comment_at - created_at) / DAY()`) and then groups these issues into predefined buckets: "Within 1 day", "Within 2 days", "Within 3 days", "Within 1 Week", and "Over 1 Week".
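Converting bucket counts into the percentages the user asked for is a small extra step. This Python sketch assumes bucket limits are inclusive (a response after exactly 24 hours counts as "Within 1 day") and adds a hypothetical "No response" bucket for issues without a first comment, which the generated insight does not mention.

```python
from collections import Counter
from datetime import datetime

# (inclusive upper limit in days, bucket name), checked in order
BUCKETS = [(1, "Within 1 day"), (2, "Within 2 days"), (3, "Within 3 days"), (7, "Within 1 Week")]


def response_bucket(days):
    if days is None:
        return "No response"  # hypothetical extra bucket
    for limit, name in BUCKETS:
        if days <= limit:
            return name
    return "Over 1 Week"


def bucket_percentages(issues):
    """issues: iterable of (created_at, first_comment_at_or_None) datetimes."""
    counts = Counter()
    for created_at, first_comment_at in issues:
        days = None
        if first_comment_at is not None:
            # (first_comment_at - created_at) / DAY()
            days = (first_comment_at - created_at).total_seconds() / 86400
        counts[response_bucket(days)] += 1
    total = sum(counts.values())
    return {name: 100 * n / total for name, n in counts.items()} if total else {}
```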

4. Deployment Frequency and DORA Metrics

SDLC Context: Deployment Frequency is a cornerstone of the DORA metrics, which have become the industry standard for measuring DevOps performance. It tracks how often an organization successfully releases to production. A higher frequency is strongly correlated with better organizational performance, as it indicates a stable and automated delivery pipeline.

Prompts Topic: This topic is centered on measuring the frequency of software deployments, a key DORA (DevOps Research and Assessment) metric. Users want to track the average number of deployments (represented by merges to specific branches like main or production) over various timeframes (daily, weekly, monthly) to gauge the team's ability to ship code consistently.

How Keypup Helps: By tracking every merge into key branches like main or production, Keypup provides a direct measure of deployment throughput. The AI can create KPIs and trend charts that give you an immediate understanding of your deployment cadence.

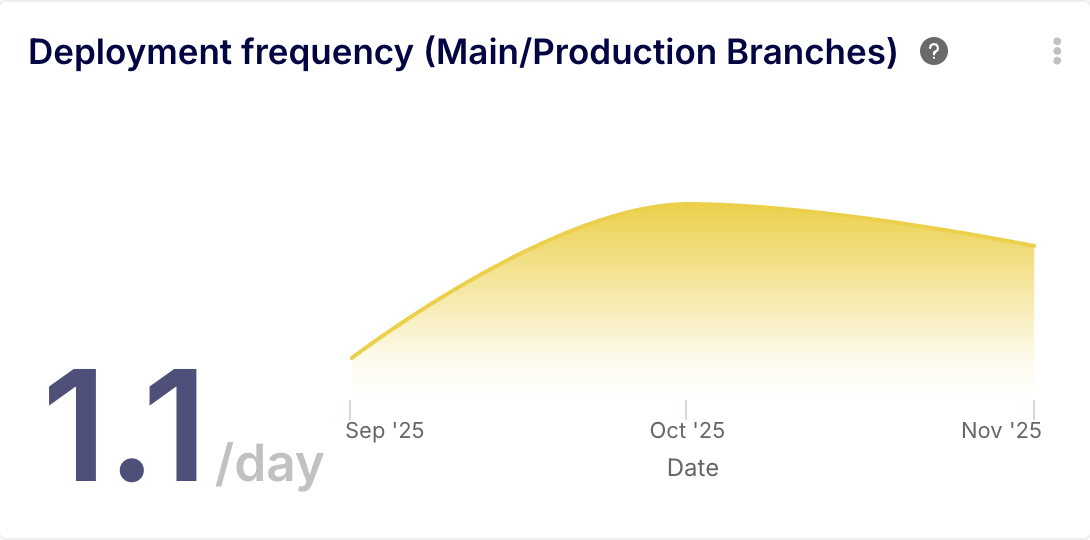

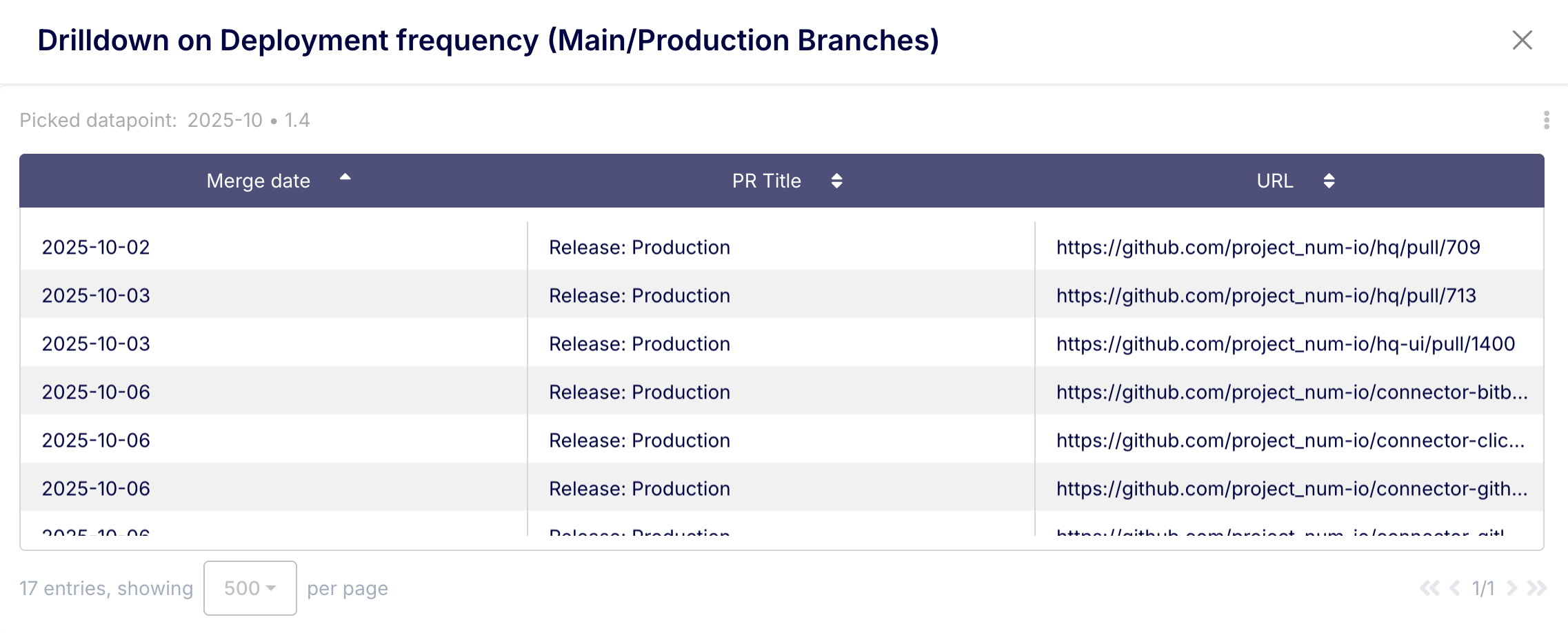

Example 1: Tracking Daily Deployments

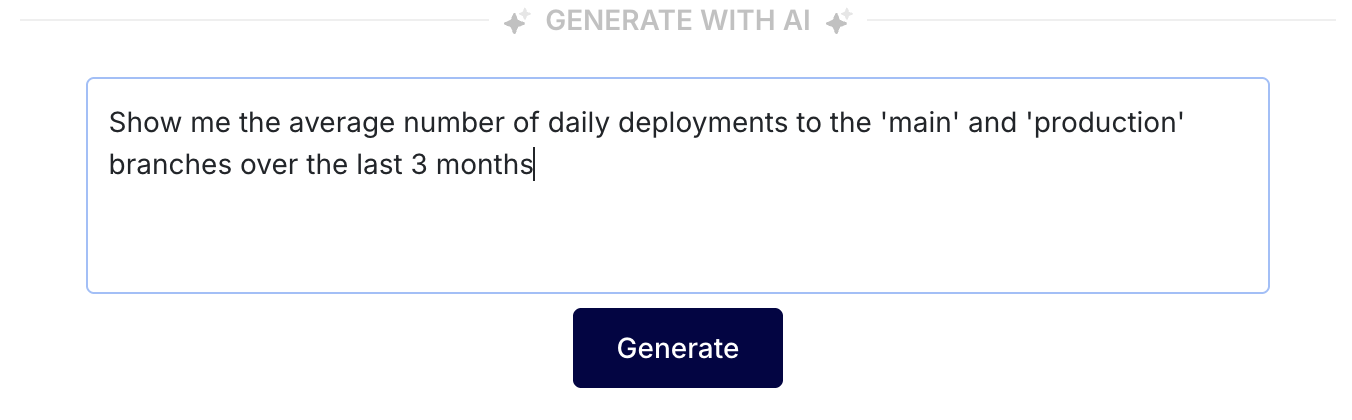

User Prompt: "Show me the average number of daily deployments to the 'main' and 'production' branches over the last 3 months"

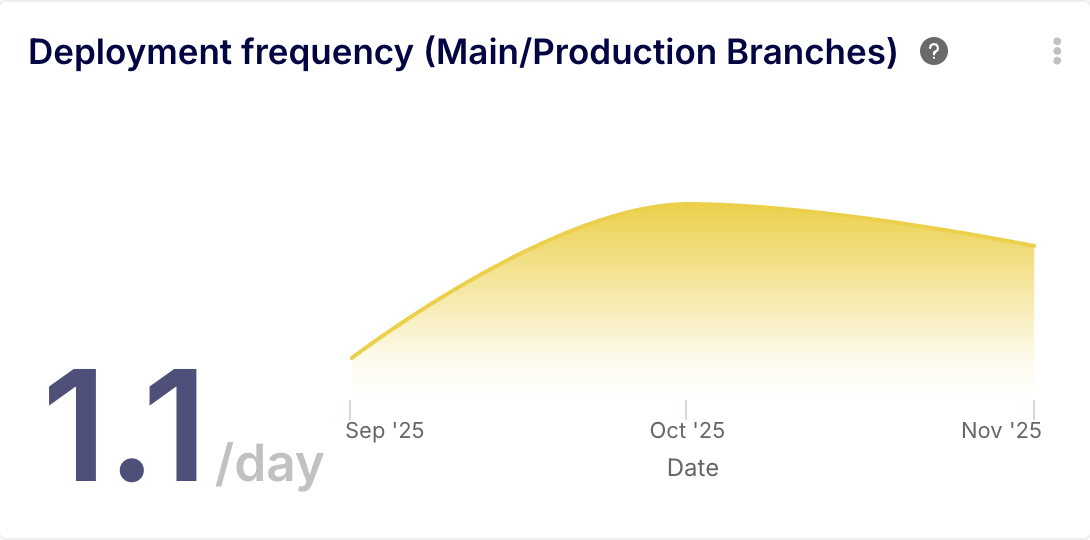

AI assistant answer

I have created a KPI with sparkline insight that shows the average number of daily deployments to the 'main' and 'production' branches over the last 3 months. The main KPI displays the overall average daily deployments for the entire period. The sparkline below it shows the monthly trend of this average. You can click on any data point in the sparkline chart to see a detailed list of the pull requests that were merged in that specific month to the 'main' or 'production' branches.
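Assuming a deployment is a PR merged into `main` or `production` (as the prompt implies), the KPI and its monthly sparkline reduce to simple divisions. The `(merged_on, target_branch)` input shape is hypothetical, and in this sketch months with no deployments are simply absent from the monthly output.

```python
import calendar
from collections import Counter
from datetime import date

DEPLOY_BRANCHES = {"main", "production"}


def avg_daily_deployments(merges, start, end):
    """Average deployments per day over [start, end], both dates inclusive.

    merges: iterable of (merged_on: date, target_branch: str).
    """
    days = (end - start).days + 1
    n = sum(1 for d, branch in merges if branch in DEPLOY_BRANCHES and start <= d <= end)
    return n / days


def monthly_avg_daily(merges):
    """Sparkline values: deployments in each month / days in that month."""
    counts = Counter((d.year, d.month) for d, branch in merges if branch in DEPLOY_BRANCHES)
    return {
        (y, m): c / calendar.monthrange(y, m)[1]  # monthrange()[1] = days in month
        for (y, m), c in sorted(counts.items())
    }
```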

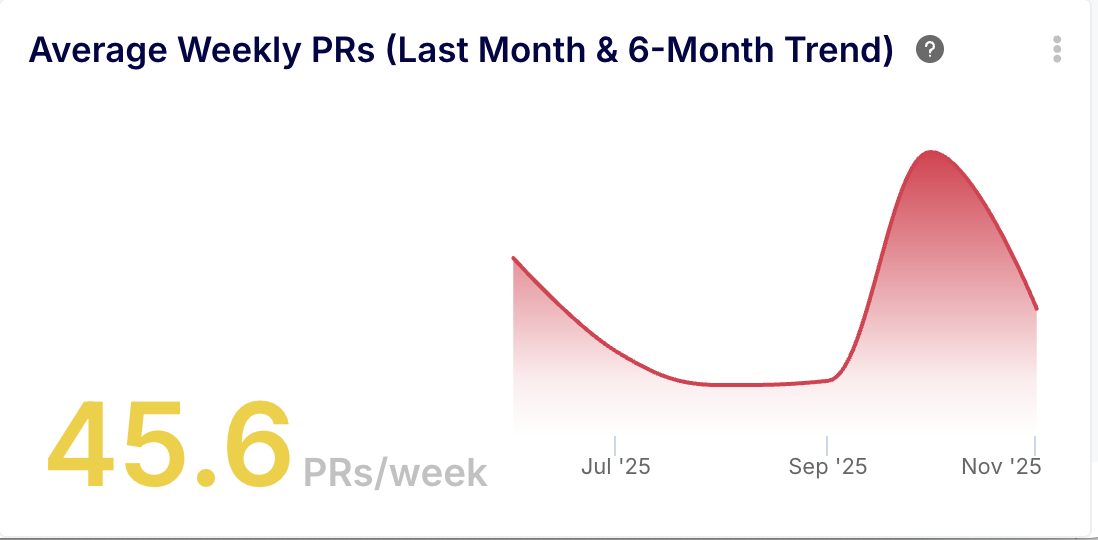

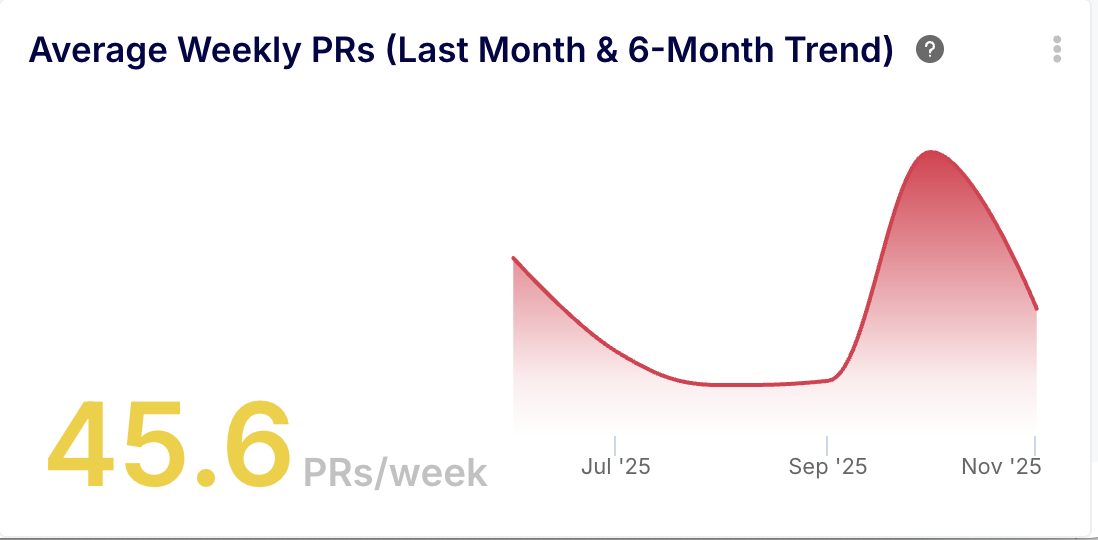

Example 2: Calculating Average Deployments Per Week

User Prompt: "I cannot seem to be able to properly obtain the number of days in each dimension's month and calculate the average of PRs per week"

AI assistant answer

To get the "average number of PRs per week" within that month, you need to:

- Count the number of relevant pull requests for that month using `COUNT_IF(condition)`.

- Divide this count by the number of weeks in that specific month, which can be derived from your `d1` dimension (number of days in month) divided by 7.

I've updated the configuration to implement this logic for all three metrics (Main, Stage, and Develop) to ensure consistent calculation of the average number of PRs per week based on the actual number of days in each month shown in your dimension.
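The suggested formula, `COUNT_IF(condition) / (d1 / 7)`, can be checked with a short Python sketch. Here `calendar.monthrange` supplies the number of days in the month (the role `d1` plays in the user's configuration); the `(merged_on, target_branch)` input and the branch names `main`, `stage`, and `develop` are assumptions based on the three metrics mentioned.

```python
import calendar
from datetime import date


def avg_prs_per_week(merged_prs, year, month, branch):
    """COUNT_IF(merged into `branch` this month) / (days_in_month / 7).

    merged_prs: iterable of (merged_on: date, target_branch: str).
    """
    count = sum(
        1 for d, b in merged_prs if b == branch and (d.year, d.month) == (year, month)
    )
    days_in_month = calendar.monthrange(year, month)[1]  # the role of `d1`
    return count / (days_in_month / 7)


def weekly_averages(merged_prs, year, month, branches=("main", "stage", "develop")):
    # One value per metric (Main, Stage, Develop), all using the same formula
    return {b: avg_prs_per_week(merged_prs, year, month, b) for b in branches}
```

For February 2024 (29 days), 8 PRs merged into `main` gives 8 / (29 / 7) ≈ 1.93 PRs per week.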

5. Team and Sprint Velocity

SDLC Context: For teams using Agile methodologies, velocity is the ultimate measure of predictability and throughput. It quantifies the amount of work a team can complete in a given iteration (e.g., a sprint). Tracking velocity helps with future planning, resource allocation, and identifying whether a team is becoming more efficient over time.

Prompts Topic: This topic focuses on measuring team-level performance, often within the context of Agile sprints. Users want to track velocity by measuring the amount of work completed in a given period. Common metrics include the number of Story Points delivered or the total number of items (like pull requests) merged per sprint or month.

How Keypup Helps: Keypup allows you to track velocity using the units that matter to you, whether it's story points, issue counts, or PRs merged. The platform’s ability to use custom fields and aggregate data across projects makes it easy to build team-specific velocity charts.

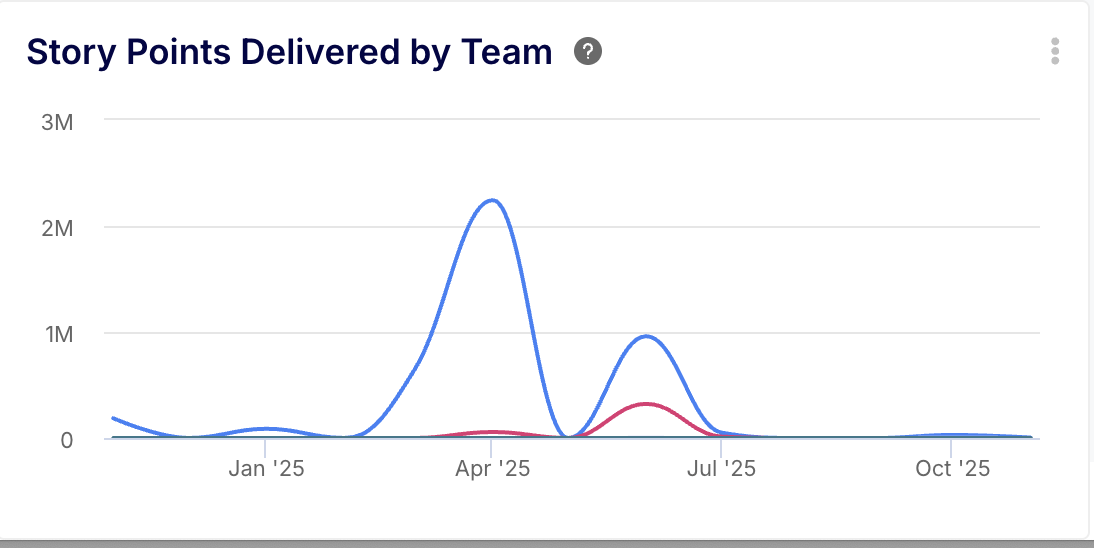

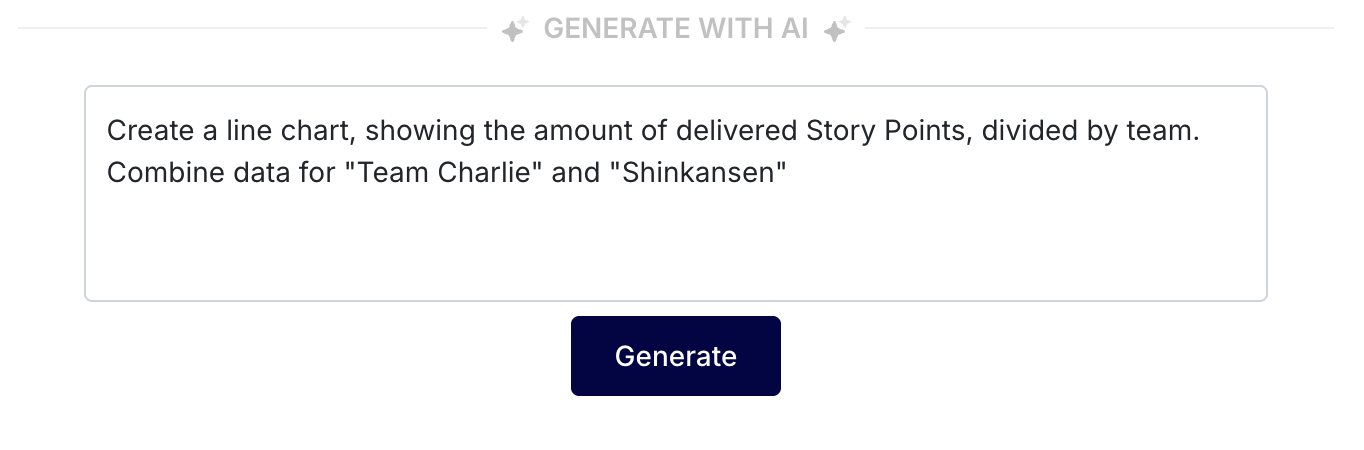

Example 1: Measuring Velocity with Story Points

User Prompt: "Create a line chart, showing the amount of delivered Story Points, divided by team. Combine data for "Team Charlie" and "Shinkansen""

AI assistant answer

I've created a line chart titled "Delivered Story Points by Team" that visualizes the sum of story points completed each month, grouped by specific teams. The chart is based on the "Issues & Pull Requests" dataset and includes data from the last 12 months.

Here's how it's configured:

- X-Axis (Dimension 1): Month, showing the `closed_at` date aggregated by year and month.

- Series Breakdown (Dimension 2): A custom formula `IF(CONTAINS(cf_jira_asadventure_teams___value, 'Team Charlie') || CONTAINS(cf_jira_asadventure_teams___value, 'Shinkansen'), 'Team Charlie & Shinkansen', 'Other Teams')` is used to group issues.

- Y-Axis (Metric): `SUM(story_points)`, representing the total delivered story points for each month and team group.
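The series breakdown formula groups issues by substring matches on the team field. Here is a Python equivalent, assuming the team custom field arrives as a plain string and that missing story points count as zero; the tuple input shape is hypothetical.

```python
from collections import defaultdict
from datetime import date


def team_group(teams_value):
    # IF(CONTAINS(v, 'Team Charlie') || CONTAINS(v, 'Shinkansen'), ..., 'Other Teams')
    if "Team Charlie" in teams_value or "Shinkansen" in teams_value:
        return "Team Charlie & Shinkansen"
    return "Other Teams"


def story_points_by_month(issues):
    """issues: iterable of (closed_at: date, teams_value: str, story_points or None)."""
    totals = defaultdict(float)
    for closed_at, teams_value, points in issues:
        key = (closed_at.strftime("%Y-%m"), team_group(teams_value))
        totals[key] += points or 0  # SUM(story_points); None treated as 0
    return dict(totals)
```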

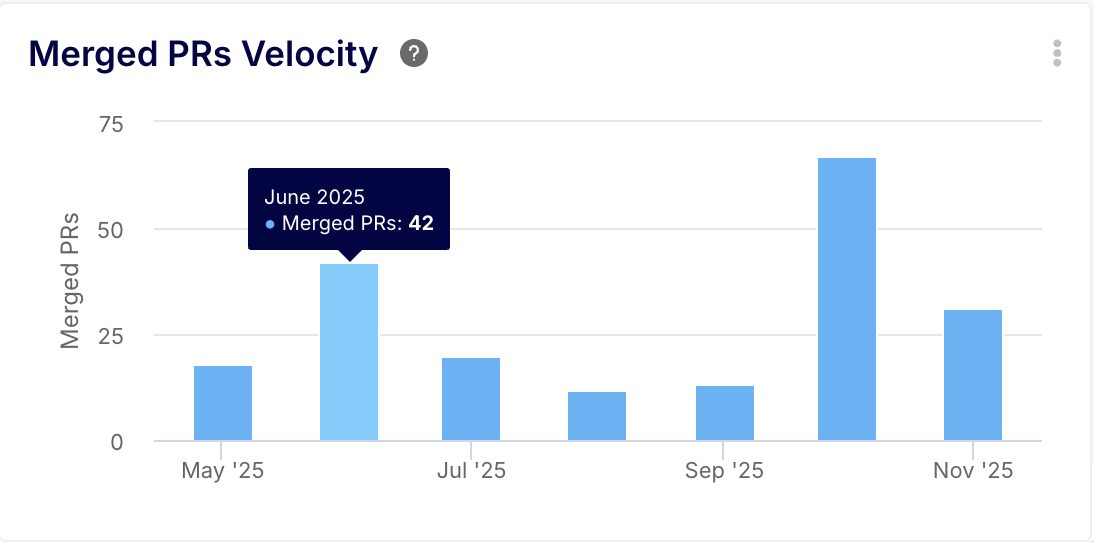

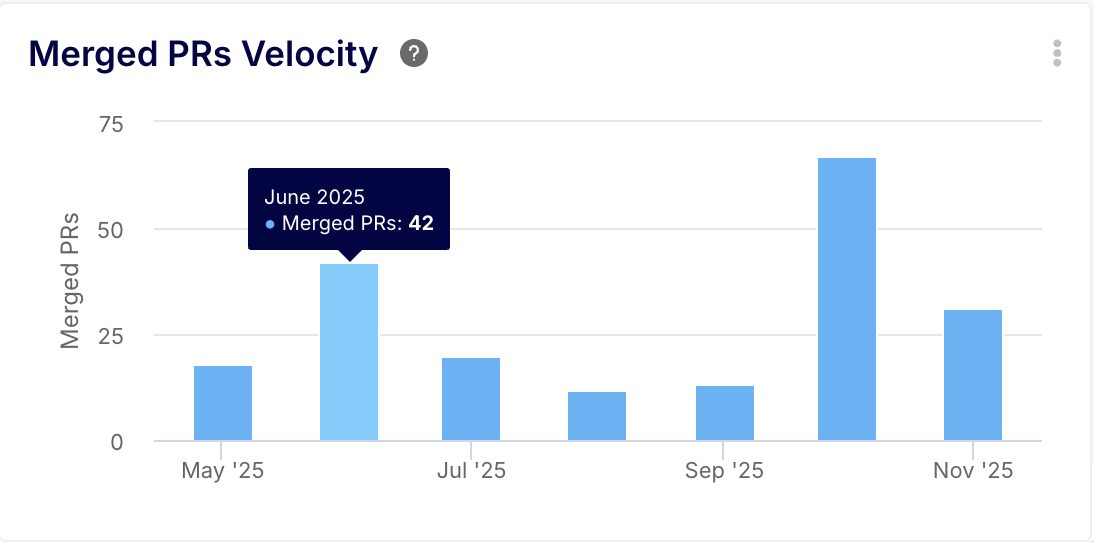

Example 2: Measuring Velocity by PRs Merged

User Prompt: "I'd like a chart to measure dev velocity based on total number of PRs merged in over the last 6 months."

AI assistant answer

This chart measures development velocity by showing the total number of pull requests merged each month over the last 6 months. It provides a clear visual trend of how many pull requests your team is completing. Clicking on any month's bar will drill down to a list of individual pull requests merged during that specific month, including details like their title, author, project, and URL.
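A monthly merged-PR velocity chart needs one bar per month, including months with zero merges. This sketch assumes "the last 6 months" means the current calendar month plus the five before it, and takes a hypothetical list of merge dates as input.

```python
from collections import Counter
from datetime import date


def last_n_months(today, n):
    """(year, month) pairs for the current month and the n - 1 before it, oldest first."""
    y, m = today.year, today.month
    months = []
    for _ in range(n):
        months.append((y, m))
        m -= 1
        if m == 0:
            y, m = y - 1, 12
    return list(reversed(months))


def merged_prs_per_month(merged_dates, today, n=6):
    """Zero-filled monthly counts of merged PRs, one bar per month."""
    counts = Counter((d.year, d.month) for d in merged_dates)
    return [(ym, counts.get(ym, 0)) for ym in last_n_months(today, n)]
```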

Conclusion: From Data to Decisions

These five topics represent the core of what modern, data-driven engineering teams are focused on. They are moving beyond simple activity tracking to measure what truly matters: productivity, quality, efficiency, and throughput.

Getting these insights shouldn't be a chore. With platforms like Keypup.io, you can connect your tools in minutes and use natural language to ask the critical questions you need answered. By turning raw data from your SDLC into actionable insights, you can empower your team to build better software, faster.

Ready to get these insights for your team? Try Keypup for free and start making data-driven decisions today.